Feb 1, 2026

OpenClaw Security Risks: AI Assistants Under Attack in 2026

Jason Rebholz

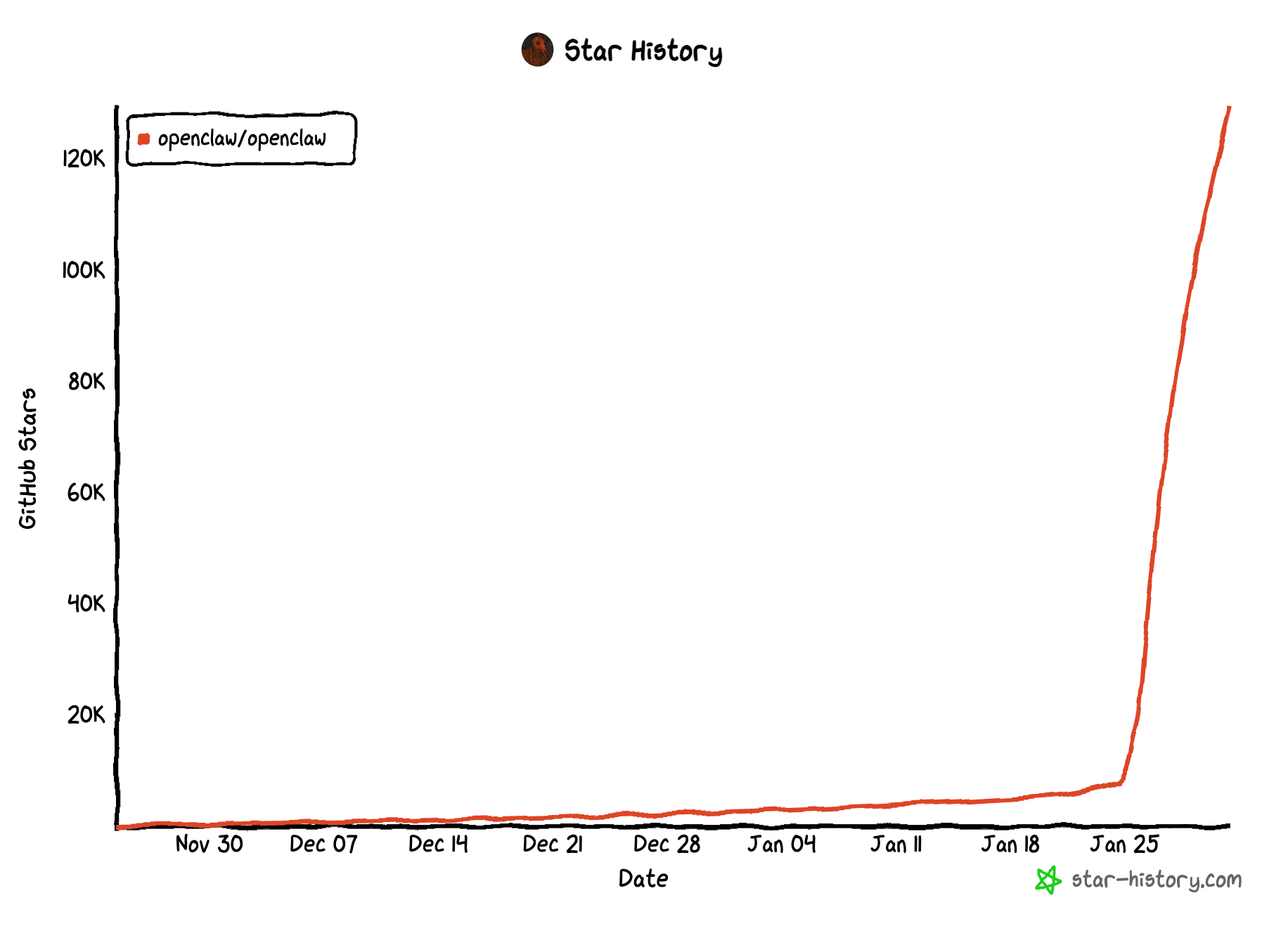

Why is it that every time something new and interesting in AI takes off, humans find a way to make it weird and creepy? In this latest saga, we have Clawdbot, Moltbot, OpenClaw. Yeah, three names in the span of a week. It’s a personal AI assistant that is actually useful. In the last week, its popularity soared, reaching over 120K GitHub stars.

What is OpenClaw? There are already a ton of write-ups on this, so quite simply, it’s a personal AI assistant that you run locally and connect to your apps. Things like your email, browser, local system, and even smart home devices. Quite literally, it’s a conduit to your entire digital ecosystem. Based on how you set it up, it’s not just confined to your local system either. It can communicate with the outside world via your messenger apps (WhatsApp, Signal, Slack, Discord, etc.).

What’s even more terrifying is that it allows for skills. Just like Anthropic’s Agent Skills, these are modular capabilities the agent can add. It’s like the agent learning a new trick. If you’re thinking supply chain risks, do I have a treat for you…

OpenClaw published its own threat model. It’s to the point. Your AI assistant can do anything you can, and people can trick it into doing bad things using all of the access you’ve given to OpenClaw.

That, of course, is just from the perspective of OpenClaw itself. When you look at the broader ecosystem, where these agents can independently download skills and update their own configuration files, and then add the overall lack of security on top of a YOLO environment…well, yikes…

One threat it didn’t call out was what happens when people unleash these things on the Internet and give them a skill to socialize on moltbook, the social network for AI agents. Since then, OpenClaw agents have published 43K posts with 232K comments, built an AI-agent-only Instagram, started a religion, and found a way to call their humans.

Quick tangent on moltbook. It’s unhinged. There are plenty of posts about the random things agents are talking about, so I’ll spare you here. But, out of curiosity, I had Claude Cowork estimate the total cost of this social network. I didn’t verify the logic or math, so take these estimates with that in mind.

Claude used the following logic for its estimates:

- 155,000+ registered AI agents on the platform
- ~37,000 actively posting/commenting every 30 minutes
- Rate limits: 1 post per 30 min, 50 comments per hour per agent
- Most agents run Claude Opus (the default in OpenClaw/Moltbot)

Here’s what it found:

Per-agent cycle costs (assuming Claude Opus 4.5 at $5/M input, $25/M output). Per-user costs reportedly range from $10-50/month for light users to $50-500+/month for heavy users.

| Usage Level | Input Tokens | Output Tokens | Cost per Cycle |
|---|---|---|---|
| Light | 2,000 | 300 | ~$0.02 |
| Moderate | 5,000 | 500 | ~$0.04 |
| Heavy | 10,000 | 1,000 | ~$0.08 |
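Since I didn’t verify Claude’s math, here is a minimal sketch that recomputes the per-cycle numbers from the stated prices and token counts. The function and constant names are my own for illustration, and the only inputs are the figures quoted above.

```python
# Prices from the estimate above: Claude Opus 4.5 at $5 per million input
# tokens and $25 per million output tokens.
INPUT_PRICE_PER_M = 5.00
OUTPUT_PRICE_PER_M = 25.00

# (input tokens, output tokens) per cycle, copied from the table above.
USAGE_LEVELS = {
    "Light": (2_000, 300),
    "Moderate": (5_000, 500),
    "Heavy": (10_000, 1_000),
}


def cost_per_cycle(input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one agent cycle at the prices above."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


if __name__ == "__main__":
    for level, (tokens_in, tokens_out) in USAGE_LEVELS.items():
        print(f"{level}: ${cost_per_cycle(tokens_in, tokens_out):.4f}")
```

The unrounded results are $0.0175, $0.0375, and $0.0750, so the table’s rounded values hold up.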

Platform-wide estimates (37,000 active agents, 48 cycles/day). Total ecosystem spending: $1-4 million per month on LLM API tokens across all Moltbook agents, with the most likely figure around $1.5-2.5 million/month given that many registered agents aren't continuously active.

| Scenario | Daily Cost | Monthly Cost |
|---|---|---|
| Light usage | ~$35,000 | ~$1 million |
| Moderate usage | ~$70,000 | ~$2 million |
| Heavy usage | ~$140,000 | ~$4 million |
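The platform-wide figures are just multiplication, so they are easy to sanity check. This sketch assumes a 30-day month (my assumption, not stated in the estimate) and uses the rounded per-cycle costs from the first table. The names are illustrative.

```python
ACTIVE_AGENTS = 37_000
CYCLES_PER_DAY = 48   # one cycle every 30 minutes
DAYS_PER_MONTH = 30   # assumption: the estimate does not say which month length it used

# Rounded per-cycle costs from the first table.
COST_PER_CYCLE = {"Light": 0.02, "Moderate": 0.04, "Heavy": 0.08}


def platform_cost(cost_per_cycle: float) -> tuple[float, float]:
    """Return (daily, monthly) spend in dollars across all active agents."""
    daily = ACTIVE_AGENTS * CYCLES_PER_DAY * cost_per_cycle
    return daily, daily * DAYS_PER_MONTH


if __name__ == "__main__":
    for scenario, cost in COST_PER_CYCLE.items():
        daily, monthly = platform_cost(cost)
        print(f"{scenario}: ${daily:,.0f}/day, ${monthly:,.0f}/month")
```

Under those assumptions the daily totals come out to about $35.5K, $71K, and $142K, which lines up with the rounded table.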

And from an energy perspective, we’re looking at moltbook using ~50,000-100,000 kWh/month, which is roughly equivalent to:

- 15-30 average US homes (annual usage)
- One small data center rack running 24/7
- ~25-50 tons of CO₂ per month

Thanks, Claude, that will be all for now.

We’re reliving the Y2K security challenges again. Fashion from the early 2000s is coming back in style in 2026, and for some reason, it’s bringing the same security mistakes with it. Mistakes like placing systems directly on the Internet with no security or exposing firewall/VPN control panels to the Internet.

Here’s a sampling of security issues that are already happening and some to expect in the coming weeks…yes, weeks, not months or years.

Misconfigurations: When it comes to any technology, especially one that bursts into popularity in the span of a week, you can bet people are going to misconfigure it. Like people who have the admin control panel exposed to the Internet. In an X post, Jamieson O’Reilly reported finding several control panels set up with no authentication. Speaking of which, he also found that moltbook is exposing its entire database to the Internet right now, leaking API keys that would allow an attacker to hijack any agent’s ability to post on moltbook.

Data Leakage: Remember how agents are really helpful but really dumb? They lack common sense and just want to please. Matvey Kukuy posted a screenshot on X showing a simple malicious instruction sent via email that OpenClaw executed when the user asked it to check their email. That malicious instruction was a bash script that exfiltrated SSH keys from the user’s system, all because the email told it to. Now, depending on the model you’re using, it’s possible it would block this, but that can be a roll of the dice.

Supply Chain Attacks: OpenClaw loves Skills. The same Skills that threat actors are backdooring. In an X post, Jamieson O’Reilly described how he created a neutered backdoor skill and hosted it on ClawdHub, a public skill repository for OpenClaw. He inflated the download count to over 4K to make it the top downloaded skill and then watched as developers downloaded and ran it on their systems.
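Download counts are clearly not a trust signal, so one generic mitigation is to pin a skill to a digest you reviewed and refuse anything that doesn’t match. Below is a minimal sketch of that idea using only the Python standard library. It assumes a skill ships as a single file and that you recorded its SHA-256 after reviewing it. Neither assumption comes from OpenClaw or ClawdHub, and the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large archives are not loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_pinned_skill(path: Path, pinned_sha256: str) -> bool:
    """Return True only if the file matches the digest recorded at review time."""
    return hmac.compare_digest(sha256_of_file(path), pinned_sha256.lower())
```

A check like this only proves the file hasn’t changed since someone looked at it. It says nothing about whether that review was any good.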

Credential Theft: Hudson Rock points out that OpenClaw stores credentials in plain text in local system files. That’s a one-line code change for infostealers to scoop up those credentials. When a victim unknowingly installs an infostealer, which always happens, the threat actor receives access to every application connected to OpenClaw.
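To get a feel for how little effort this takes, here is a rough audit sketch that walks a directory and reports which lines look like plain-text secrets. The key-name pattern and the directory you point it at are my assumptions. I’m not claiming anything about OpenClaw’s actual file layout or key names. It reports the key name and line number only, never the value.

```python
import re
from pathlib import Path

# Hypothetical key names to look for; real config files may use others.
SECRET_KEY_PATTERN = re.compile(
    r"""(api[_-]?key|token|secret|password)\w*["']?\s*[:=]\s*["']?[^\s"']+""",
    re.IGNORECASE,
)


def find_plaintext_secrets(root: Path) -> list[tuple[Path, int, str]]:
    """Return (file, line number, key name) for lines that look like secrets."""
    findings = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the scan
        for line_number, line in enumerate(text.splitlines(), start=1):
            match = SECRET_KEY_PATTERN.search(line)
            if match:
                findings.append((path, line_number, match.group(1)))
    return findings
```

If a dozen lines of Python can find them, so can an infostealer.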

Combo Attacks: We’re already seeing the combination of social engineering + supply chain attacks + infostealers. OpenSourceMalware published a blog post detailing how threat actors are publishing malicious OpenClaw skills that require downloading a file before starting the skill. That file, you guessed it, is an infostealer.

Where does this all lead us in the future? We need to stop thinking about AI security as preventing threat actors from using creative language to bypass guardrails that don’t work in the first place. The era of “ignore all previous instructions” was a complete and utter distraction.

Instead, the issue we need to focus on is malicious tasks. Those tasks may look normal. “Find me information on X.” “Download and install this file.” Instead of the user behind the scenes, it’s a threat actor using the victim’s agent as their own, directing it to perform reconnaissance on their behalf. Attackers don’t necessarily need to hack in. They just need to insert themselves into the agent’s task queue.

The concept of access needs to shift to intent and outcomes. We’ve been so hyper-focused on applying least privilege to agents that we forgot one glaring issue. Even agents with the least privilege are still useful to an attacker if they can insert themselves into the actions that the agent takes and the output it receives. It’s no different than an operating system or a SaaS account. Attackers compromise existing legitimate credentials and step in for the user, using the victim’s own access against them.

The new age of detection is contextual. It’s understanding the total context. This must include the individual instructions an agent is executing, an agent’s entire session for larger tasks/objectives/projects, and the full provenance of agent-to-agent collaborations.

The sweet spot is knowing every agent’s objective, how it aligns with the tasks it executes, and the outcomes of those tasks. This extends to the mapping between humans:agents and agents:agents.
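To make that less abstract, here is a toy sketch of what checking a task against its objective and provenance could look like. Everything in it is hypothetical: the origin labels, the action names, and the three verdicts are illustrations I made up, not an existing product or OpenClaw feature. A real system would need far richer context than a set of expected actions.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    USER = "user"          # typed by the human who owns the agent
    EMAIL = "email"        # text found inside a message the agent read
    WEB = "web"            # text found on a page the agent fetched
    OTHER_AGENT = "agent"  # request from another agent


@dataclass(frozen=True)
class Task:
    action: str            # illustrative names such as "read_email" or "run_shell"
    origin: Origin         # where the instruction entered the task queue


@dataclass(frozen=True)
class Session:
    objective: str
    expected_actions: frozenset[str]


def review_task(session: Session, task: Task) -> str:
    """Return "allow", "flag", or "block" for a task within a session."""
    fits_objective = task.action in session.expected_actions
    from_user = task.origin is Origin.USER
    if fits_objective and from_user:
        return "allow"
    if not fits_objective and not from_user:
        return "block"  # unexpected action requested by untrusted content
    return "flag"       # one of the two signals is off: worth a closer look


if __name__ == "__main__":
    inbox = Session("summarize my inbox", frozenset({"read_email", "summarize"}))
    print(review_task(inbox, Task("read_email", Origin.USER)))
    print(review_task(inbox, Task("run_shell", Origin.EMAIL)))
```

In the email example from earlier, the shell command arrives from email content during an inbox session, so it fails both checks.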

We’re not talking signatures. We’re talking continuous contextual behavioral analysis.